ReVISit 2 Paper Wins Best Paper Award at IEEE VIS

We’re happy to share that our paper on ReVISit 2 received a Best Paper Award at IEEE VIS 2025. ReVISit 2 is an open framework for designing, deploying, and disseminating browser-based visualization studies across the full experiment life cycle. In this post, we summarize the contributions of the paper, describe the replication studies that demonstrate the system’s capabilities, reflect on feedback from users, and outline how reVISit can support more reproducible and expressive experimental research.

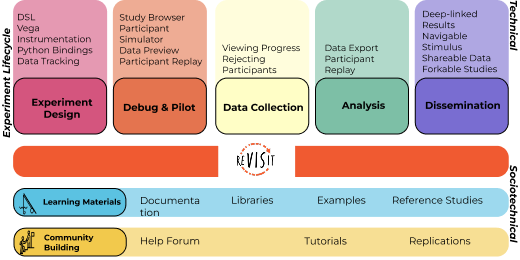

Running online visualization studies is now standard practice in VIS and HCI research. Yet the process remains fragmented: researchers stitch together survey tools, custom web code, logging scripts, analysis pipelines, and ad hoc debugging workflows. Users of reVISit already know this story: reVISit consolidates this ecosystem into a single open framework that supports the entire experiment life cycle – from design to dissemination.

To inform the academic community about the new developments in reVISit 2, which we already described in these blog posts, we wrote an academic paper about it.

Positioning reVISit in the Ecosystem

In the paper, we first situate reVISit among existing study platforms. We compare it to commercial survey systems, domain-specific research tools, and library-based frameworks. While survey platforms excel at rapid deployment, they rarely support sophisticated interaction logging or fine-grained experimental control. Academic tools often address specific domains or slices of the workflow, but lack long-term maintainability or broad adoption.

ReVISit 2 is designed differently: it treats experiment design as programmable infrastructure. A JSON-based domain-specific language (DSL) models sequences, blocks, counterbalancing strategies, interruptions, skip logic, and dynamic control flow. On top of that, reVISitPy provides Python bindings that allow researchers to generate complex study configurations directly from notebooks. The result is a framework that emphasizes expressiveness, reproducibility, and ownership over one’s experimental stack.
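To give a feel for what "experiment design as programmable infrastructure" can look like, here is a minimal sketch in plain Python that generates a nested study configuration and a Latin-square block order. It does not use reVISitPy, and the field names (`components`, `sequence`, `order`, `latinSquare`) as well as the helpers `latin_square` and `build_config` are illustrative assumptions rather than the verified reVISit schema; consult the reVISit documentation for the actual format.

```python
import json


def latin_square(items):
    """Return a cyclic Latin square: row i is the item list rotated by i."""
    n = len(items)
    return [[items[(row + col) % n] for col in range(n)] for row in range(n)]


def build_config(conditions, trials_per_condition):
    """Build a hypothetical study configuration as a plain dictionary.

    All keys are assumptions made for illustration, not the real schema.
    """
    components = {}
    blocks = []
    for cond in conditions:
        names = []
        for t in range(trials_per_condition):
            name = f"{cond}-trial-{t}"
            components[name] = {"condition": cond, "trialIndex": t}
            names.append(name)
        # Trials inside a block are shuffled; blocks are counterbalanced.
        blocks.append({"id": cond, "order": "random", "components": names})
    return {
        "components": components,
        "sequence": {"order": "latinSquare", "components": blocks},
    }


conditions = ["bar", "scatter", "line"]
config = build_config(conditions, trials_per_condition=2)
square = latin_square(conditions)

# Participant k would see the blocks in the order given by row k mod n.
print(square[1])
print(json.dumps(config["sequence"], indent=2))
```

Generating the configuration from code rather than writing it by hand keeps large designs consistent: changing the number of trials or conditions is a one-line edit, and the resulting JSON can be versioned alongside the study.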

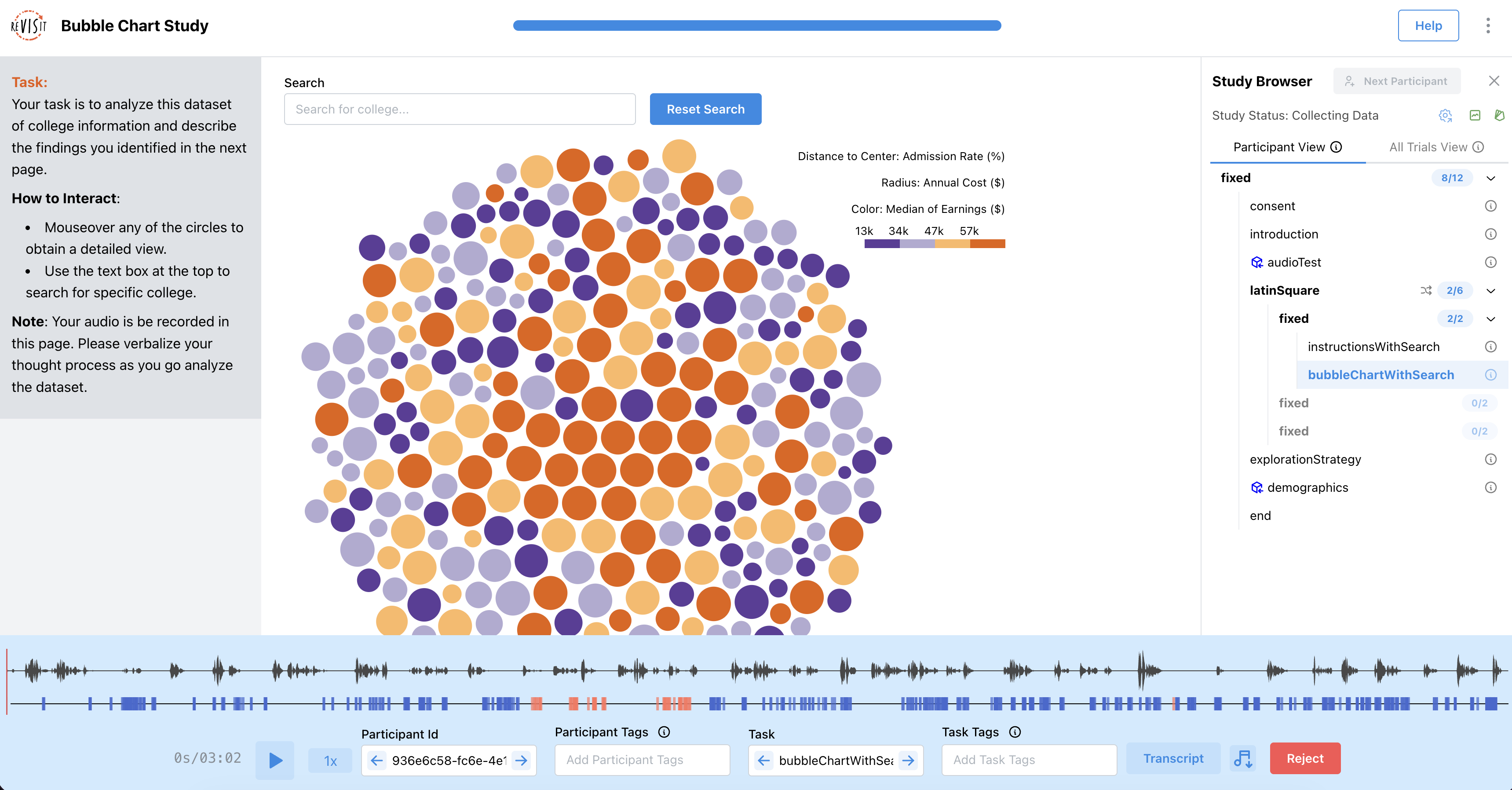

We also describe technical advances in reVISit 2, including first-class Vega support, automated provenance tracking, participant replay, and improved debugging tools such as the study browser. These features aim to tighten feedback loops during piloting while preserving transparency during dissemination.

Putting it to the Test: Replication Studies

To demonstrate that these capabilities are not merely architectural, we conducted a series of replication studies.

Each study highlights a different capability of the system. In one, we implement adaptive and staircase-style designs to evaluate visualizations of correlations using dynamic sequencing logic, showing how complex control flow can be expressed directly in the study configuration. In another, we integrate think-aloud protocols by embedding audio recording and transcription into browser-based experiments, allowing researchers to capture reasoning during interaction rather than only after the fact. Finally, we demonstrate provenance tracking and replay by instrumenting interactive visualizations to capture detailed interaction histories, enabling fine-grained participant replay and qualitative analysis. reVISit 2 also provides deep linking to specific trials or moments in user studies, to aid in dissemination and transparency. For example, this link takes you to the exact state you see in the above image.
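For readers unfamiliar with the staircase-style designs mentioned above, the following is a generic one-up/one-down staircase sketched in Python. It is not the procedure or code from the paper or from reVISit; the function `run_staircase`, its parameters, and the simulated participant are all hypothetical, chosen only to show the kind of dynamic sequencing logic such a study needs.

```python
def run_staircase(respond, start, step, min_level, max_level,
                  max_reversals, max_trials=100):
    """Run a simple one-up/one-down staircase.

    `respond(level)` returns True for a correct answer. After a correct
    answer the task gets harder (level decreases); after an incorrect one
    it gets easier (level increases). A reversal is recorded whenever the
    direction of change flips.
    """
    level = start
    last_direction = 0
    history = []
    reversals = []
    while len(reversals) < max_reversals and len(history) < max_trials:
        correct = respond(level)
        history.append((level, correct))
        direction = -1 if correct else 1
        if last_direction != 0 and direction != last_direction:
            reversals.append(level)
        last_direction = direction
        # Clamp the next level to the allowed range.
        level = min(max_level, max(min_level, level + direction * step))
    return history, reversals


def simulated_participant(level):
    """Deterministic stand-in: correct whenever the level is at least 4."""
    return level >= 4


history, reversals = run_staircase(
    simulated_participant, start=8, step=1,
    min_level=0, max_level=10, max_reversals=4,
)
# A common threshold estimate is the mean of the reversal levels.
estimate = sum(reversals) / len(reversals)
print(reversals, estimate)
```

Because each trial depends on the previous response, a design like this cannot be written as a fixed list of trials, which is why dynamic control flow in the study configuration matters.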

Across these replications, we recruited hundreds of participants and reproduced key findings from prior visualization studies. Just as importantly, the studies surfaced practical lessons about counterbalancing, recruitment logistics, and the realities of deploying sophisticated designs online. Together, they function both as validation and as a stress test of the framework. They also remain publicly accessible – complete with study configurations and data – serving as concrete, real-world examples that the community can inspect, reuse, and learn from.

What Users Told Us

We also interviewed experienced reVISit users to better understand how the system performs in practice. Several themes emerged:

- Tighter development loops. Users appreciated the integrated development environment and the study browser, which makes it possible to jump directly to specific trials without stepping through an entire study.

- Expressiveness over convenience. While the DSL requires programming knowledge, users valued the flexibility it affords – especially for mixed designs and adaptive sequencing.

- Learning curve trade-offs. ReVISit inherits complexity from modern web tooling (e.g., React, TypeScript). This can be a barrier for less technical researchers, but it also enables deeper customization and extensibility.

- Open infrastructure. The ability to fork studies, inspect core code, and maintain version stability was frequently cited as a strength, particularly for reproducibility.

Overall, feedback confirmed that reVISit is most effective for technically oriented research teams who need more than a survey builder.

Recognition at IEEE VIS

We were very honored to receive an IEEE VIS Best Paper Award for this work. Zach Cutler presented the paper on the main stage in Vienna.

This recognition reflects years of iterative development, community feedback, tutorials, documentation work, and, most importantly, the researchers who have trusted reVISit in their own studies.

ReVISit 2 is not the endpoint. It is infrastructure for a research community that increasingly relies on sophisticated, reproducible, browser-based experiments. We look forward to continuing to build it together.

The Paper

This blog post is based on the following paper:

ReVISit 2: A Full Experiment Life Cycle User Study Framework

IEEE Transactions on Visualization and Computer Graphics (VIS), 2026

IEEE VIS 2025 Best Paper Award